Artificial intelligence (AI) has progressed fast. The past few years have turned what felt like sci-fi into real software that’s accessible, affordable, and already being used in boardrooms around the world.

If you’re a board director or executive with limited time, it can be difficult to separate the hype from the facts and know exactly what the risks are.

This guide aims to cut through the noise with a factual look at where generative AI is today and its role in preparing board packs that set directors up for success.

What is generative AI?

Before we dive into the use of AI to improve board packs, let’s get clear on the basics: what exactly is generative AI?

Generative AI is a type of artificial intelligence that can produce original content in response to a prompt. This includes everything from text and images to code, spreadsheets, presentations, and even music. Rather than simply retrieving information, it creates new material based on what it has learned from training data.

The best-known version of this is the large language model (LLM). These models, such as OpenAI’s GPT-4, Anthropic’s Claude, and Google’s Gemini, are trained on huge datasets of publicly available text. They learn to predict the next word in a sequence, allowing them to generate coherent, context-aware output.
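To make "predicting the next word" concrete, here is a deliberately tiny sketch. It is not how GPT-4, Claude, or Gemini are built (those use neural networks trained on vastly more text); it only counts which word most often follows another in a made-up corpus. The corpus and the function names `train_bigram_model` and `predict_next` are illustrative assumptions.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def predict_next(model, word):
    """Return the most frequently seen follower of `word`, or None if unseen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical toy corpus, invented for this illustration only.
corpus = (
    "the board approved the budget "
    "the board reviewed the risk register "
    "the board approved the strategy"
)
model = train_bigram_model(corpus)

print(predict_next(model, "board"))        # approved
print(predict_next(model, "acquisition"))  # None (never seen in training)
```

Even at this scale, the model only knows what is likely given its training text, not what is true. That distinction matters when we come to the risks.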

For example, you can ask an LLM to summarize a long report or reword complex content for clarity. The results aren’t always perfect, but they’re often close enough to give you a valuable head start.

Modern LLMs like GPT-4o and Claude 3 go one step further. They offer multimodal capability, meaning they can process and reason across images, charts, PDFs, spreadsheets, and even audio. They can handle hundreds of pages of text at a time, making it possible to ask questions about an entire board pack. They also offer conversational fluency. You can interact with them in a natural back-and-forth way, asking follow-up questions, refining outputs, and clarifying intent.

These upgrades make generative AI a highly practical tool. It is rapidly becoming part of the workflow for directors and executives who need to digest complex board materials quickly and apply the information in a high-stakes context.

Pro tip: Read our guide "How generative AI works — and what it means for boards" for more details.

What makes today’s AI different from earlier tools?

It can be difficult to keep up with the pace of innovation in the AI industry. Over the past few years, three big changes have stood out:

- Scale: Models like GPT-4 Turbo can process over 300 pages of text in one go, so you can feed in an entire board pack and ask for a high-level summary.

- Multimodality: GPT-4o and other new models can understand and analyze tables, charts, PDFs, and audio as well as text. This makes them more useful in handling the varied formats found in board materials.

- Cost and accessibility: Running AI models used to be expensive. Now, tools are fast, cloud-based, and increasingly embedded into the platforms already used by boards, like Board Intelligence’s board portal.
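As a rough sanity check on the "over 300 pages" figure, the sketch below converts a model's context window into pages. GPT-4 Turbo's published context window is 128,000 tokens; the words-per-token and words-per-page values are rule-of-thumb assumptions that vary with language and layout, and the function names are hypothetical.

```python
GPT4_TURBO_CONTEXT_TOKENS = 128_000  # published context window for GPT-4 Turbo
WORDS_PER_TOKEN = 0.75               # assumption: common rule of thumb for English text
WORDS_PER_PAGE = 300                 # assumption: a fairly dense page of prose


def pages_that_fit(context_tokens,
                   words_per_token=WORDS_PER_TOKEN,
                   words_per_page=WORDS_PER_PAGE):
    """Estimate how many pages of text fit into a context window."""
    return int(context_tokens * words_per_token / words_per_page)


def fits_in_context(page_count, context_tokens=GPT4_TURBO_CONTEXT_TOKENS):
    """Rough check of whether a board pack of `page_count` pages fits in one go."""
    return page_count <= pages_that_fit(context_tokens)


print(pages_that_fit(GPT4_TURBO_CONTEXT_TOKENS))  # 320
print(fits_in_context(250))                       # True
print(fits_in_context(400))                       # False
```

Treat the result as an estimate only: tables, charts, and appendices can use far more tokens per page than plain prose.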

Basically, these models are now business-ready, and the AI landscape is maturing fast. For boards, that means:

- Model upgrades: New models offer faster performance and can execute more complex tasks.

- Real case studies: Boards are already using AI tools like Board Intelligence’s Insight Driver to reduce preparation time and flag reporting gaps. This helps mitigate concerns and encourages wider adoption.

- Better integration: Board management software providers are embedding AI into trusted, configurable features designed for board and governance use cases.

This rapid progress presents exciting opportunities for boards and directors, but it also comes with risks. What should boards watch out for?

What AI risks are most relevant to boards?

AI isn’t perfect, and using it without clear oversight can lead to significant governance risks. There are three main issues for boards to keep in mind when assessing or making decisions about AI use:

1. Bias and fairness: AI recreates the biases it’s been trained on

AI models reflect the data they’re trained on, and if that data contains bias, so will the output. This is especially risky in decisions around people, risk, or customer policy.

In a stark illustration of the problem, Oxford researchers found that a popular image-generating AI tool could create pictures of white doctors providing care to black children but not of black doctors doing the same for white children.

What a biased AI generates when prompted for images of a “black African doctor helping poor and sick white children”. © Alenichev, Kingori, Grietens; University of Oxford

In 2023, the UK’s Centre for Data Ethics and Innovation called for boards to prioritize fairness and explainability in AI governance. Boards should require impact assessments for high-risk applications and request documentation on how model bias is mitigated.

2. Hallucination: AI makes up facts

AI models may sometimes generate false or misleading content with complete confidence. As Dr Haomiao Huang, an investor at renowned Silicon Valley venture firm Kleiner Perkins, puts it: “Generative AI doesn’t live in a context of ‘right and wrong’ but rather ‘more and less likely.’”

Often, that’s sufficient to generate an approximate answer. But sometimes that leads to entirely made-up facts. And in an ironic twist for computers, AI can particularly struggle with basic math.

An incorrect answer confidently served by ChatGPT. © Ars Technica

This can have real-world consequences. For example, in 2023, a lawyer submitted a legal brief that included fictitious case law invented by ChatGPT.

In a board context, incorrect figures or misattributed citations could lead to poor decision-making and land leaders in tricky legal waters. That’s why AI-generated summaries or recommendations must always be reviewed by a human.

3. Data leakage: AI leaks information

Uploading confidential materials to public AI tools (such as the free version of ChatGPT) can inadvertently expose sensitive company information. If the provider uses what you submit to train future models, that information could later surface in response to prompts from other users. Imagine your CFO asking, “We want to acquire competitor X, what should I know about them?” and that information then being given to an outsider asking, “What’s up with company X?”

This isn't merely a hypothetical risk. In 2023, Samsung banned staff from using generative AI tools after engineers uploaded confidential source code to ChatGPT.

Running a private model within your organization will not mitigate this risk completely, as it would only stop leaks between your organization and outsiders, not between people within your organization. Imagine one of your colleagues, who isn’t on the board, asking, “What should I include in my report to impress the CFO?” and the AI replying, “The CFO is thinking of buying X, you should research that company.”

Boards should insist on using enterprise-grade AI platforms with proper security controls, including data isolation, encrypted storage, and audit logging.

What about regulation?

Mitigating these risks doesn’t mean you have to avoid AI; it means you need to use it responsibly. But boards can’t do this in a vacuum; to do it well, they also need to understand the evolving regulatory context.

Global AI regulation is catching up, and boards should be aware of emerging obligations:

- EU AI Act: The EU AI Act is the world’s first comprehensive AI regulation. It places clear rules on high-risk systems (such as those affecting employment, credit, or safety) and introduces transparency rules for general-purpose AI models like ChatGPT. Some obligations have already come into effect.

- US state laws: In the absence of federal regulation, US states are passing their own AI laws. For example, Colorado’s SB 24-205, passed in May 2024, requires organizations to conduct impact assessments, notify consumers of AI use, and take steps to prevent algorithmic discrimination.

- UK approach: The UK has taken a less prescriptive approach. Rather than legislating immediately, the government has issued guidance asking existing regulators (like the Financial Conduct Authority) to interpret AI risks within their own domains. But calls for stronger rules are growing, especially in financial services and critical infrastructure.

Boards should review their technology risk registers, identify where AI is used, and ensure compliance with the relevant frameworks. In high-risk use cases, consider conducting a Fundamental Rights Impact Assessment (FRIA), a tool used in Europe to evaluate how AI systems might affect individuals’ rights and freedoms. The Council of Europe offers a useful guide.

How can board directors use AI?

With regulators’ increasing focus on AI, and the limitations and risks we’ve discussed above, you might think that AI is inherently flawed and has no place in your board pack. Well, that’s only half correct.

Generative AI cannot yet be trusted to author board material or exercise the careful judgment expected of directors. But it can shine as an editor and on-call sparring partner: its ability to analyze and rephrase text can challenge directors and report writers and nudge them in the right direction.

For example, from a director’s perspective, AI can help with:

1. Summarizing long reports

This is perhaps the most obvious application for boards: feed in a long board report or policy document and ask the AI to generate a summary. If the tool has been designed by engineers who understand how boards work, you could also ask it to flag anything needing board-level attention, or pick up points that deal with a particular theme or board priority. Insight Driver, a feature within Board Intelligence's board portal, has been designed to do all of this.

2. Spotting gaps or bias

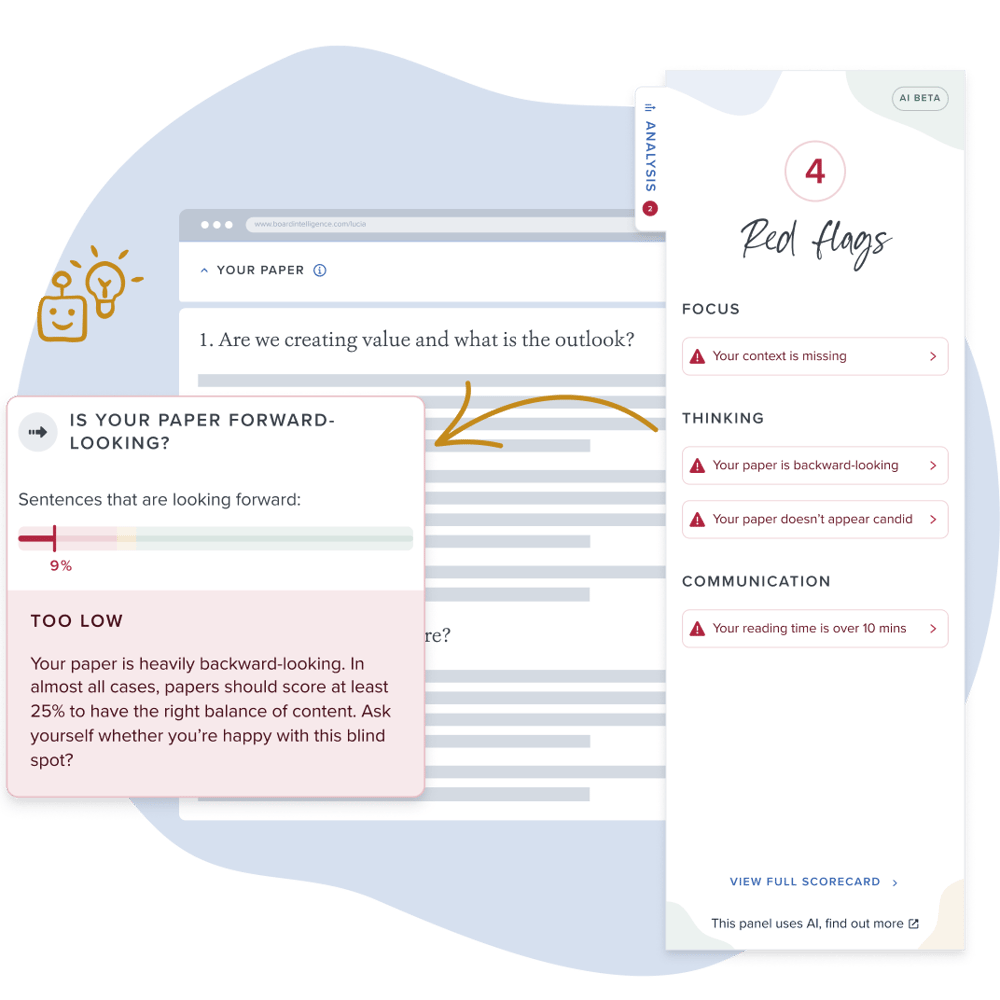

If your AI tool knows what makes an effective board pack and the insights your directors need, it can identify the blind spots in analysis and recommendations. For example, it can pick up if a proposal hasn't outlined alternative options or considered the impact a plan will have on key stakeholders.

3. Scanning for risks and opportunities

Point AI at your board pack (and external data) to identify emerging opportunities or risks. It can categorize them by committee relevance and surface issues before they become more pressing.

4. Producing minutes and actions

When the board meeting is over, you can use AI to create action lists and a solid first draft of your meeting minutes, improving accuracy and saving your governance team time.

How can AI be used in board packs?

These examples are just a starting point. In addition to saving time and reducing the preparation burden, AI can be used to fix the inputs: to improve the quality of board papers and ensure that the information being served up to directors is rich in relevant insight. Boards are in serious need of this, with only 36% of directors thinking their board pack adds value and 68% rating their board materials as “weak” or “poor”.

To explore where AI can help, let's dive a bit deeper into the two core components of the board pack writing process: critical thinking and great writing.

1. Stimulating critical thinking with AI

Effective board papers don’t just share information. They surface breakthrough insights and game-changing ideas, and they stimulate both the author's and the reader’s thinking.

AI can help your report writers deliver this by letting them know if they’re falling into common critical thinking traps and nudging them towards more actionable insights and plans. For example, it can ask:

- Does the content of your report link to the organization’s big picture?

- Are you providing insight or just information?

- Is your analysis sufficiently forward-looking?

- Are you sharing information candidly, or massaging the message?

When issues are flagged, it’s up to the writer to decide how to act on that feedback. For example, maybe the bad news hasn’t been omitted, and the report looks overly optimistic simply because last quarter was great. As the subject matter expert, the writer will have more context than the AI. What matters is that the AI challenged the thinking process and pointed out potential gaps, helping produce a more robust paper and sharper insights for the board to act on.

Board Intelligence’s AI-powered management reporting software, Lucia, provides real-time prompts and feedback that challenge your team to think more deeply as they write.

2. Guiding great writing with AI

Critical thinking is only half the battle, and great information won’t do much good if the reader can’t easily process it. AI can help here, too, by asking questions that drive better communication:

- Has an executive summary been included?

- Are sentences short and concise, or long and rambling?

- Is the vocabulary simple or jargon-heavy?

Because generative AI excels at modifying existing text and “translating” it from one representation into another, it can help fix these issues with the click of a button. For example, it can extract the report’s key points and use them to draft a best practice executive summary.
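The three writing questions above can be roughly approximated even without a language model. The sketch below is a simple heuristic check, not a description of how Lucia or any other product works; the jargon list, the 25-word sentence threshold, and the function names are all assumptions made for illustration.

```python
import re

# Hypothetical jargon list and threshold; tune both to your own house style.
JARGON = {"synergy", "leverage", "paradigm", "holistic"}
MAX_WORDS_PER_SENTENCE = 25


def split_sentences(text):
    """Split on whitespace that follows sentence-ending punctuation."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def review_paper(text):
    """Run three basic checks: summary present, long sentences, jargon used."""
    sentences = split_sentences(text)
    words = set(re.findall(r"[a-z']+", text.lower()))
    return {
        "has_executive_summary": "executive summary" in text.lower(),
        "long_sentences": [
            s for s in sentences if len(s.split()) > MAX_WORDS_PER_SENTENCE
        ],
        "jargon_found": sorted(words & JARGON),
    }


# Made-up sample text: two short sentences followed by one 30-word sentence.
sample = (
    "Executive summary. We will leverage synergy across regions. "
    + " ".join(["word"] * 30)
    + "."
)
report = review_paper(sample)
print(report["has_executive_summary"])  # True
print(report["jargon_found"])           # ['leverage', 'synergy']
print(len(report["long_sentences"]))    # 1
```

Checks like these only flag symptoms. Rewriting a rambling sentence or drafting the summary itself is where generative AI adds value, and the writer still decides what to keep.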

Questions every board should ask about AI

To effectively and responsibly harness AI, boards must ask the right questions about it. We’d recommend asking the following five questions:

- Are we using AI in our board reporting? If so, where are we using it and what are we trying to achieve?

- How are we validating its outputs?

- Who’s accountable for errors or misuse?

- What’s our plan for model monitoring and retraining?

- Are we keeping a record of prompts and outputs for audit purposes?

Good governance is critical here. AI can help the board make better decisions, but only if it’s used with transparency and oversight.
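For the last question in the list above, a record of prompts and outputs can be as simple as a chain of timestamped entries, each carrying a hash of the one before it, so that later edits are detectable. This is a minimal sketch using only Python's standard library; the field names, the `make_audit_record` and `verify_chain` helpers, and the sample values are assumptions, and a real deployment would also need access controls and secure storage.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body):
    """Hash a record body deterministically (keys sorted before hashing)."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def make_audit_record(user, prompt, output, model_name, previous_hash=""):
    """Build one audit entry linked to the previous entry by its hash."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "model": model_name,
        "prompt": prompt,
        "output": output,
        "previous_hash": previous_hash,
    }
    record["hash"] = _digest(record)  # hash covers every field set so far
    return record


def verify_chain(records):
    """Return True only if no entry was altered, removed, or reordered."""
    previous_hash = ""
    for record in records:
        body = {k: v for k, v in record.items() if k != "hash"}
        if record["previous_hash"] != previous_hash:
            return False
        if _digest(body) != record["hash"]:
            return False
        previous_hash = record["hash"]
    return True


# Hypothetical entries, invented for illustration.
first = make_audit_record(
    "company.secretary", "Summarize the CFO report", "Draft summary", "example-model"
)
second = make_audit_record(
    "company.secretary", "List the actions agreed", "Draft actions", "example-model",
    previous_hash=first["hash"],
)
print(verify_chain([first, second]))  # True

first["output"] = "Edited after the fact"
print(verify_chain([first, second]))  # False
```

Bear in mind that the log itself will contain confidential prompts and outputs, so it needs the same protection as the board pack.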

Next steps: Bringing AI into your board pack

To start using AI in your board pack, you needn’t overhaul your entire process. Start with small steps:

- Choose one or two areas where AI can save time, like summarizing reports or drafting meeting minutes.

- Use tools that are purpose-built for board materials, with the right security and audit controls.

- Ensure that human review is a part of the process, to catch any issues early and build trust in the outputs.

Board Intelligence’s AI tools are built for this. For example, take Lucia, our AI-powered report writing tool. Lucia's design is rooted in best practice reporting methodology, the QDI Principle, meaning it helps management prepare board materials that hit the mark. It’s also designed with the controls expected by boards, so it delivers the insight that directors need in a secure format that's easy to read and engage with.

Want to try it for yourself?

Find out more about our AI tools or book a demo to see how Board Intelligence's AI-powered board management and board reporting tools can set your board up for success.